Ukraine’s AI Drone Revolution Hits Hardware Reality: Full Autonomy Remains Years Away Despite Battlefield Success


Ukraine’s rapid advances in AI-powered drone warfare are running into a fundamental obstacle that no amount of software innovation can overcome: the physical limits of what computing power a small drone can carry into battle.

A comprehensive investigation by the Kyiv Independent reveals that despite deploying thousands of AI-enabled systems and achieving dramatic improvements in strike accuracy, fully autonomous drones remain “well out of reach” due to hardware constraints rather than software limitations. The reality check comes as Ukraine’s drone developers work to compensate for a roughly 3-to-1 manpower disadvantage against Russia.

The Computing Power Problem No One Talks About

While consumer AI applications like ChatGPT hide their massive computing requirements in distant data centers, battlefield drones face a cruel paradox: AI autonomy is most needed precisely when connections to operators—and cloud computing—are severed by electronic warfare.

“We’re not going along the route of that full autonomy,” Andriy Chulyk, co-founder of Sine Engineering, told the Kyiv Independent. “Tesla, for example, having enormous, colossal resources, has been working on self-driving for ten years and — unfortunately — they still haven’t made a product that a person can be sure of.”

The comparison to Tesla’s struggles is particularly telling. If a company with virtually unlimited resources cannot perfect autonomous navigation for ground vehicles after a decade, the challenge of cramming similar capabilities into a 10-inch (25cm) drone operating in contested airspace becomes clear.

“The main role of AI in the nearest future on the real battlefield will be just a kind of supportive function rather than replacing humans,” Kate Bondar, senior fellow at the Center for Strategic and International Studies, told the publication.

What’s Actually Working: Incremental Gains Over Revolutionary Breakthroughs

Ukraine’s real AI success story isn’t about autonomous killer robots—it’s about making human pilots more effective through “last-mile targeting” systems that maintain target locks even when radio connections fail.

The Fourth Law, founded by serial entrepreneur Yaroslav Azhnyuk, produces AI vision modules costing approximately $70 that have demonstrated remarkable results. Preliminary data from one brigade showed hit rates improving from 20% to 80%—a fourfold increase achieved by enhancing the drone’s ability to track targets through shadows and tree cover rather than just locking onto pixels.

The company’s TFL-1 autonomy module increases mission success rates two- to fivefold while adding only 10-20% to unit costs. These modules handle the critical final 500 meters (1,640 feet) of flight, where electronic warfare is most intense, but a human pilot still selects the target and initiates the mission.

Speaking with the Kyiv Independent, Azhnyuk acknowledged that full autonomy in one-off demonstrations would already be feasible, but “massive deployment” remains “a whole different challenge, one that’s nowhere in sight.”

Three Use Cases Show AI’s Current Limits

Interceptor Drones: Still Pilot-Dependent

Ukrainian developers are testing AI-enhanced interceptors to counter Russian Shahed-type drones that attack cities nightly. Given Ukraine’s size and the speed of newer jet-powered Shaheds, these interceptors need coverage across vast territory with minimal operators.

Azhnyuk, who also runs Odd Systems (currently testing anti-Shahed interceptors), noted that “Ukrainian solutions currently lack the AI part” compared to systems like Merops from Eric Schmidt’s company. Current interceptors still rely on skilled FPV pilots manually flying high-speed pursuits—a limitation driven by hardware constraints rather than software sophistication.

FPV Strike Drones: Better Targeting, Not Independence

“Last-mile targeting” has become fairly standard across Ukraine’s FPV fleet, functioning similarly to autofocus on consumer cameras. A pilot selects a target while maintaining video connection, and the drone’s AI maintains that lock after jamming breaks the connection.

This represents significant progress but remains far from revolutionary autonomy. The neural networks must run on computers small and light enough for drones to carry, limiting the complexity of decision-making possible.

Deep-Strike Missions: Humans Keep Control for Ethical Reasons

A recent partnership between Shield AI and Ukrainian manufacturer Iron Belly demonstrates both the potential and the intentional limits of autonomous systems.

In field tests, Shield AI’s V-BAT reconnaissance drone (costing approximately $1 million, with a 13-hour loiter time) scouts targets and captures detailed imagery from multiple angles. It then passes this data to the D4, a cheaper kamikaze drone that uses onboard AI to navigate to the target by visual landmarks, extending “last-mile targeting” to 62 miles (100km) or more.

The system showed resilience when light rain temporarily sent a D4 off course during testing. Operators let the drone self-correct without touching controls, and it eventually found its target after 20 minutes. Production currently runs at 500-1,000 units monthly, with plans to scale to 1,000.

But there’s a crucial human-in-the-loop requirement: Ukraine will not allow deep-strike drones to select targets autonomously, because they spend too much time flying over Russian cities and civilian areas. The ethical guardrail is working exactly as intended.

The Data Quality Problem

Beyond computing hardware, Ukraine faces a data challenge. While the country has accumulated over 2 million hours of combat drone footage since February 2022, much of it comes from “mostly analog, cheap cameras,” according to Bondar.

“Yes, it will be able to distinguish between a tank and a human. It will identify that it’s a tank or it’s a vehicle or that it’s a human. But not more than that,” Bondar explained. “The problem of, for example, distinguishing between a Russian and Ukrainian soldier, or even a soldier and a civilian, has not been solved.”

Much of Ukraine’s visual AI relies on open-source software like YOLOv8 (You Only Look Once). While economical for mass deployment, it’s unlikely to match proprietary systems developed by companies with greater resources.

Marketing Hype vs. Battlefield Reality

Bondar offered a sobering assessment of AI claims in the drone industry: “War’s also a business. You have to sell something, you have to be competitive, and to be competitive you have to have an advantage. To have AI-enabled software, or some cool AI system, that’s something that sounds really cool and sexy.”

Ukraine’s Commander-in-Chief Oleksandr Syrskyi acknowledged this limitation in an August interview with RBC-Ukraine:

“(AI) is in use virtually everywhere, but you have to take into account that it can make mistakes. Virtually all of our technological weapons have elements of artificial intelligence.”

The Ukrainian military loses roughly 10,000 drones monthly to electronic warfare and other factors—a staggering attrition rate that AI enhancements have slowed but not eliminated.

DroneXL’s Take

This investigation from the Kyiv Independent provides crucial context for the avalanche of AI drone announcements we’ve covered over the past two years. From The Fourth Law’s hit rate improvements to the Pentagon’s $50 million Skynode S deployment, the narrative has focused on successes—and those successes are real.

But this reality check reveals something equally important: Ukraine’s drone developers aren’t chasing fully autonomous weapons, because they can’t build small computers powerful enough to make reliable life-and-death decisions. The Tesla comparison is particularly apt: if autonomous driving remains unsolved after 10 years and billions in investment, battlefield autonomy faces even steeper challenges.

What’s remarkable is how Ukraine has turned this hardware limitation into a strategic advantage through incremental innovation. Rather than waiting for breakthrough AI that may never arrive in useful form factors, developers like The Fourth Law focused on solving the specific problem of maintaining target locks through jamming and visual obstructions. The result: two- to fivefold improvements in mission success for a 10-20% increase in unit cost.

This approach aligns with Ukraine’s broader pattern of beating NATO procurement cycles through necessity-driven iteration. While Western defense contractors plan five-year development timelines, Ukrainian engineers test, fail, adapt, and deploy within weeks. The constraint isn’t lack of vision—it’s physics, budgets, and the urgent need for solutions that work today rather than promises of perfection tomorrow.

The ethical dimension deserves attention too. Ukraine’s decision to maintain human control over deep-strike drones flying over civilian areas shows that battlefield necessity hasn’t overridden moral considerations. As AI-powered drone swarms become increasingly feasible, this restraint offers a model other nations should study.

What do you think about the gap between AI drone hype and battlefield reality? Share your thoughts in the comments below.

Discover more from DroneXL.co

Subscribe to get the latest posts sent to your email.

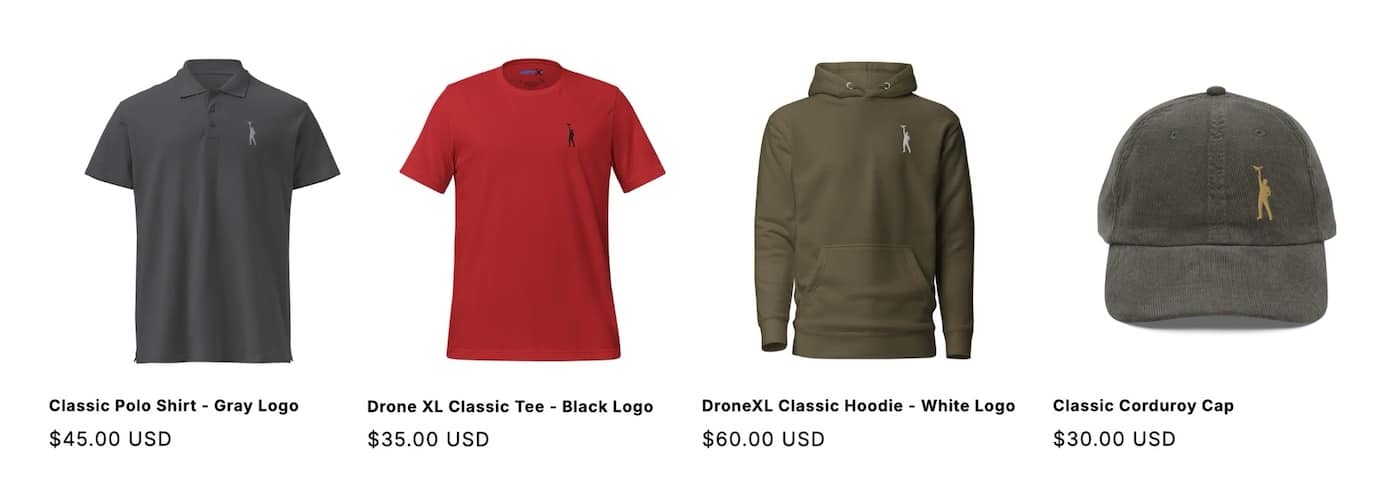

Check out our Classic Line of T-Shirts, Polos, Hoodies and more in our new store today!

MAKE YOUR VOICE HEARD

Proposed legislation threatens your ability to use drones for fun, work, and safety. The Drone Advocacy Alliance is fighting to ensure your voice is heard in these critical policy discussions.Join us and tell your elected officials to protect your right to fly.

Get your Part 107 Certificate

Pass the Part 107 test and take to the skies with the Pilot Institute. We have helped thousands of people become airplane and commercial drone pilots. Our courses are designed by industry experts to help you pass FAA tests and achieve your dreams.

Copyright © DroneXL.co 2026. All rights reserved. The content, images, and intellectual property on this website are protected by copyright law. Reproduction or distribution of any material without prior written permission from DroneXL.co is strictly prohibited. For permissions and inquiries, please contact us first. DroneXL.co is a proud partner of the Drone Advocacy Alliance. Be sure to check out DroneXL's sister site, EVXL.co, for all the latest news on electric vehicles.

